In Short

- The Meta Oversight Board is taking up nonconsensual deepfake pornography, particularly content targeting celebrities.

- Two cases involving deepfake porn, one on Facebook and one on Instagram, were brought to the board’s attention, prompting a review of how Meta handles such content on its platforms.

- The board’s efforts highlight the challenges in detecting and removing nonconsensual deepfake content, emphasizing the need for stronger regulations and accountability.

- Celebrities, often victims of such malicious content, have become a focal point in the discussion around digital privacy and security.

- The board’s investigations shed light on the evolving landscape of online threats and the measures needed to protect individuals’ privacy and dignity.

TFD – Dive into the world of nonconsensual deepfake pornography as the Meta Oversight Board steps up to address this concerning trend. Learn how celebrities are targeted, the challenges faced by social media platforms, and the urgent need for accountability in the digital age. Stay ahead of digital threats and safeguard online privacy.

As AI tools become increasingly sophisticated and accessible, so too has one of their worst applications: non-consensual deepfake pornography. While much of this content is hosted on dedicated sites, more and more it’s finding its way onto social platforms. Today, the Meta Oversight Board announced that it was taking on cases that could force the company to reckon with how it deals with deepfake porn.

The board will focus on two cases of deepfake porn, both involving celebrities whose images were manipulated to create sexual content. The board is an independent body with the authority to issue binding rulings as well as recommendations to Meta. In one case, deepfake pornography depicting an unnamed American celebrity was removed from Facebook after it had already been flagged elsewhere on the platform. To keep it off the platform, the post was also added to Meta’s Media Matching Service Bank, an automated system that finds and removes images already flagged as violating Meta’s policies.

In the other case, a deepfake image of an unnamed Indian celebrity stayed up on Instagram even after users reported it for violating Meta’s policies on pornography. According to the announcement, the deepfake of the Indian celebrity was taken down once the board took up the case.

In both cases, the images were removed for violating Meta’s bullying and harassment policy rather than its rules on pornography. Meta, however, does not permit porn or sexually graphic advertisements on its platforms, and it prohibits “content that depicts, threatens or promotes sexual violence, sexual assault or sexual exploitation.” In a blog post published alongside the announcement of the cases, Meta said it had removed the posts because they violated both its adult nudity and sexual activity policy and the section of its bullying and harassment policy that prohibits “derogatory sexualized photoshops or drawings.”

According to Julie Owono, an Oversight Board member, the board intends to use these cases to examine Meta’s policies and procedures for detecting and removing nonconsensual deepfake pornography. “I can already say, very tentatively, that detection is probably the main issue,” she says. “Detection is not as good as we would like it to be, or at least not as efficient.”

Meta has long been criticized for how it moderates content outside of the US and Western Europe. In this instance, the board has already raised concerns that the Indian and American celebrities were treated differently once their deepfakes surfaced on the platform.

“In certain markets and languages, Meta is known to moderate content more quickly and efficiently than in others. We aim to determine whether Meta is fairly safeguarding women worldwide by examining two cases—one from the United States and one from India,” says Helle Thorning-Schmidt, co-chair of the Oversight Board. “It’s critical that this matter is addressed, and the board looks forward to exploring whether Meta’s policies and enforcement practices are effective at addressing this problem.”

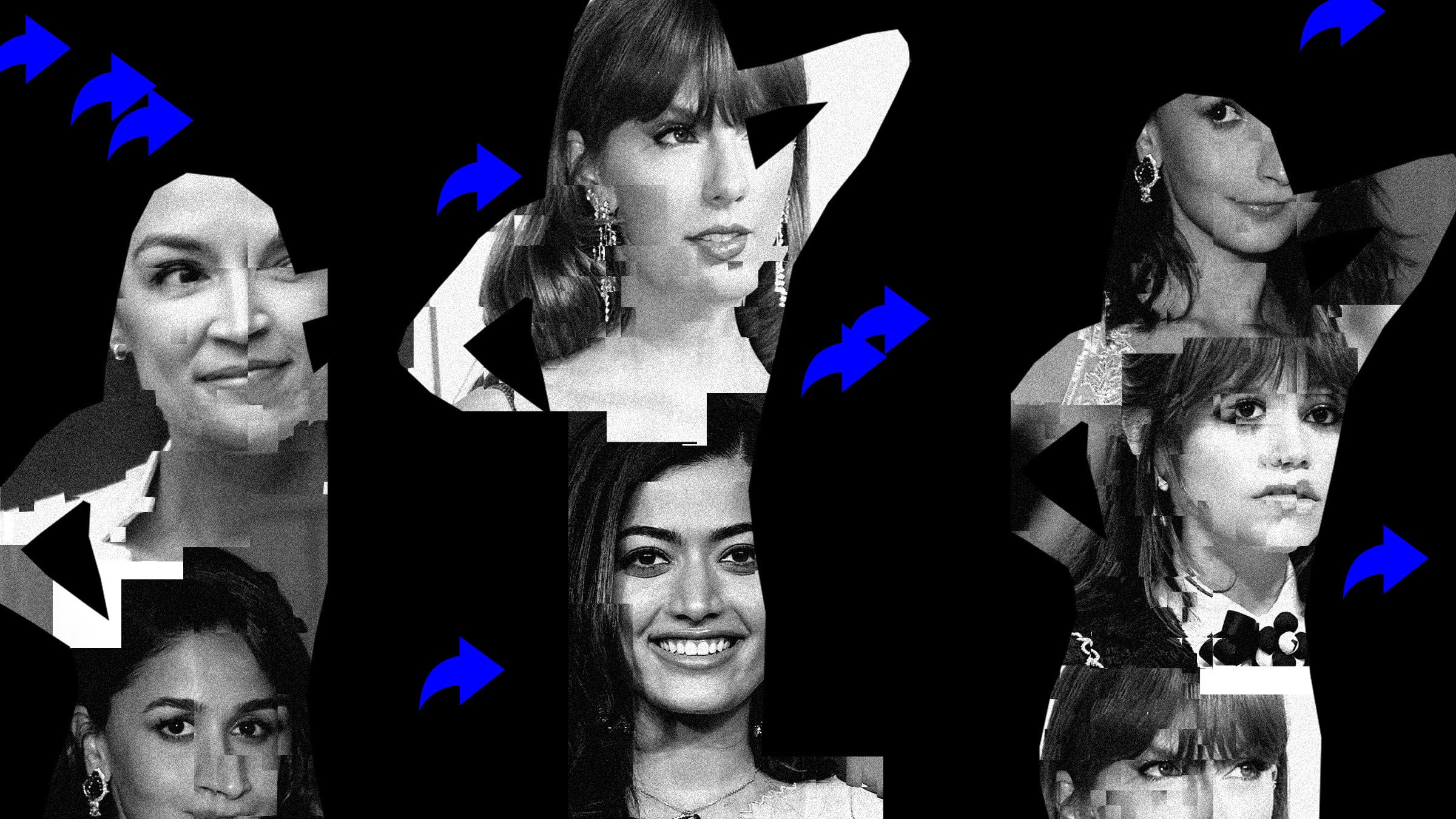

The board declined to name the Indian and American celebrities whose images spurred the complaints, but pornographic deepfakes of celebrities have become rampant. A recent Channel 4 investigation found deepfakes of more than 4,000 celebrities. In January, a nonconsensual deepfake of Taylor Swift went viral on Facebook, Instagram, and especially X, where one post garnered more than 45 million views. X resorted to blocking searches for the singer’s name, but posts continued to circulate. And while platforms struggled to remove that content, it was Swift’s fans who reportedly took to reporting and blocking the accounts that shared the image. In March, NBC News reported that ads for a deepfake app that ran on Facebook and Instagram featured images of an undressed, underage Jenna Ortega. In India, deepfakes have targeted major Bollywood actresses including Priyanka Chopra Jonas, Alia Bhatt, and Rashmika Mandanna.

Research has shown that nonconsensual deepfake pornography has overwhelmingly targeted women since the phenomenon first surfaced five years ago, and it has only grown since then. According to a WIRED investigation, more videos were posted to the top 35 deepfake porn hosting websites last year than ever before. Making a deepfake also doesn’t require much. VICE found in 2019 that as little as 15 seconds of an Instagram story was enough to produce a convincing deepfake, and the technology has only become more widely available. Last month, five students at a Beverly Hills school were expelled for making nonconsensual deepfakes of 16 of their classmates.

“Deepfake pornography is increasingly used to target, silence, and intimidate women on- and offline. It is a growing cause of gender-based harassment online,” Thorning-Schmidt says. Numerous studies show that women are the primary targets of deepfake pornography. The tools used to create it are growing more advanced and more accessible, and the content itself can cause great harm to victims.

The Disrupt Explicit Forged Images and Non-Consensual Edits Act, or DEFIANCE Act, was introduced by lawmakers in January. It would allow people to file a lawsuit if they could prove that their image was used in deepfake porn. The bill’s sponsor, Congresswoman Alexandria Ocasio-Cortez, was the victim of deepfake pornography earlier this year.

“Victims of nonconsensual pornographic deepfakes have waited too long for federal legislation to hold perpetrators accountable,” Ocasio-Cortez said in a statement at the time. “Congress needs to act to show victims that they won’t be left behind as deepfakes become easier to access and create: 96 percent of deepfake videos circulating online are nonconsensual pornography.”

Conclusion

The fight against nonconsensual deepfake pornography is a critical one in the digital age. With the Meta Oversight Board taking up these cases, there is hope for stronger rules and accountability in addressing this malicious content. Let’s work together to protect individuals, including the celebrities who are so often targeted, from the harms of nonconsensual deepfakes. Safeguarding digital privacy and security is everyone’s responsibility.

Connect with us for the latest breaking news updates and videos from thefoxdaily.com. For the most recent news in the United States, around the world, and in business, opinion, technology, politics, and sports, follow Thefoxdaily on X, Facebook, and Instagram.