Google’s developer keynote was devoted entirely to AI. The company unveiled many AI enhancements for Android, including new AI-driven chatbot tools and enhanced search capabilities.

TFD – Dive into Google’s revolutionary SynthID watermarking tool, combating deepfakes and misinformation in the digital realm.

In Short

- Google introduces SynthID, a watermarking tool against deepfakes and false information.

- The tool leaves an imperceptible watermark that software can detect for identification.

- It expands to scan content on Gemini, web, and Veo-generated videos.

Google kicked off its annual I/O developer conference today. The company typically uses the Google I/O keynote to announce an array of new software updates and the occasional hunk of hardware. There was no hardware at this year’s I/O—Google had already announced its new Pixel 8A phone—but today’s presentation was a resplendent onslaught of AI software updates and a reflection of how Google aims to assert dominance over the generative AI boom of the past couple years.

The main I/O 2024 announcements are listed below.

| Announcement | Description |

|---|---|

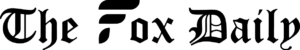

| Gemini 1.5 Flash and 1.5 Pro | Google introduced Gemini 1.5 Flash, a lighter-weight model designed for fast and efficient serving at scale. It’s the fastest Gemini model served in the API. Additionally, they significantly improved Gemini 1.5 Pro, their best model for general performance across various tasks. Both models are available in public preview on Google AI Studio and Vertex AI. |

| Project Astra | Google shared their vision for the future of AI assistants with Project Astra. |

| Trillium TPU | The sixth generation of Google’s custom AI accelerator, the Tensor Processing Unit (TPU), was announced. Trillium TPUs achieve a 4.7x increase in peak compute performance per chip compared to TPU v5e and are over 67% more energy-efficient. |

| Audio Overviews for NotebookLM | Google demoed an early prototype of Audio Overviews, which creates personalized verbal discussions based on uploaded materials. |

| Grounding with Google Search | This tool, which connects the Gemini model with world knowledge and up-to-date information on the internet, is now generally available on Vertex AI. |

| Audio Understanding in Gemini API | Gemini 1.5 Pro can now reason across image and audio for videos uploaded in AI Studio. |

| Imagen 3 | Google unveiled Imagen 3, their highest-quality image generation model. It understands natural language and generates photorealistic, lifelike images with fewer visual artifacts. Imagen 3 is also excellent at rendering text, a challenge for image generation models. |

Google’s on-device mobile large language model, Gemini Nano, is getting an upgrade. It will now be known as Gemini Nano with Multimodality, which Google CEO Sundar Pichai said on stage allows it to “turn any input into any output.” This means it can extract information from text, images, audio, web or social media videos, and live video captured by your phone’s camera, then combine that information to create a summary or answer any questions you may have. A Google video demonstrated this with someone using a camera to scan every book on a shelf and record the titles in a database so that the books could be recognized later.

Google’s more robust cloud-based AI technology, Gemini 1.5 Pro, is now accessible to all developers worldwide. Read Will Knight’s WIRED interview with Demis Hassabis, cofounder of Google’s DeepMind, to learn more about the company’s AI goals.

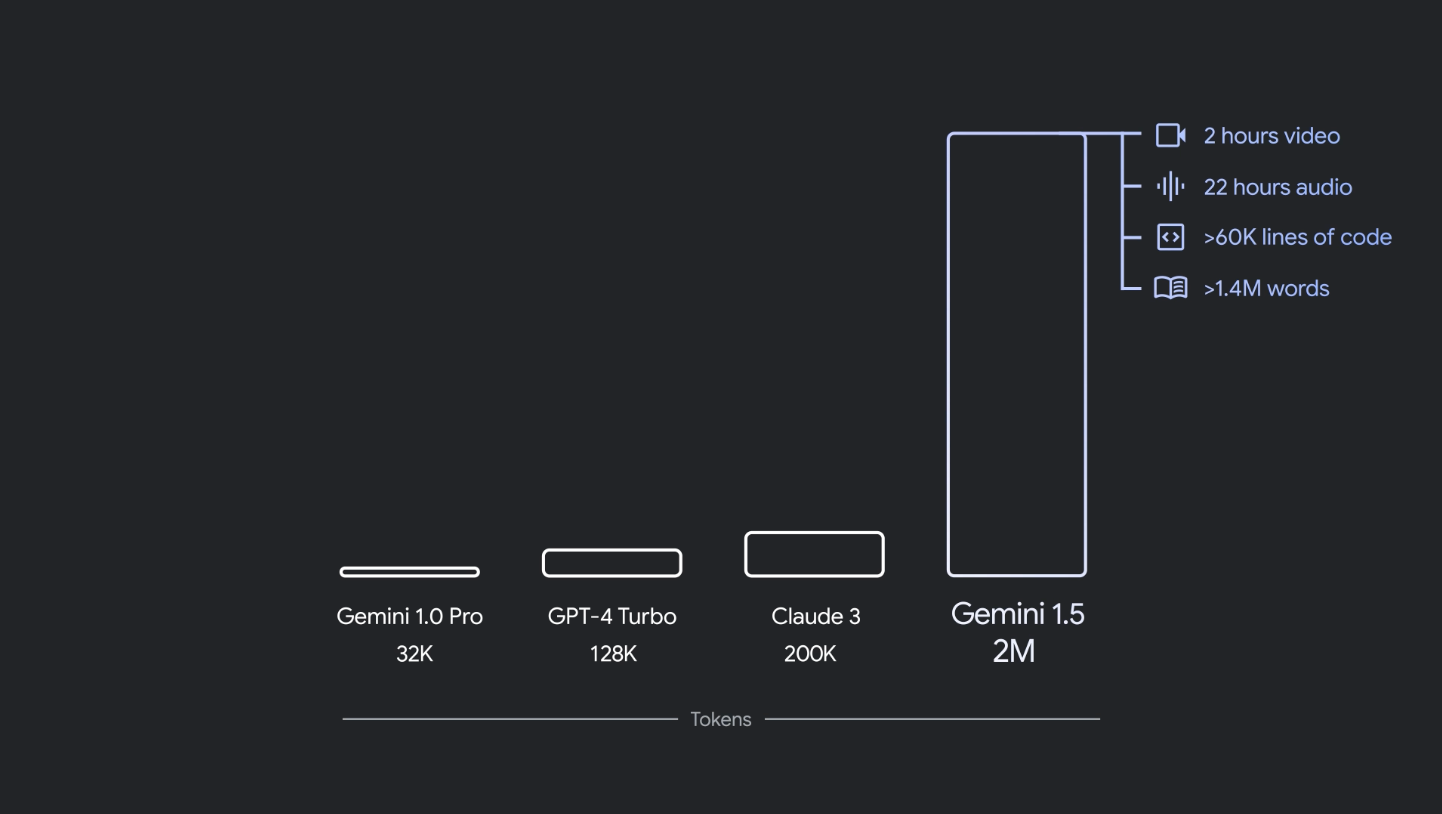

Google Photos already comes with some powerful visual search features built in. With a new feature called Ask Photos, you can now ask Gemini to search your photos and get more precise results than before. For example, give it your license plate number, and it will use context cues to find your vehicle in every photo you have ever taken.

Jerem Selier, a software engineer at Google, says in a blog post that the feature doesn’t collect data on your photos that could be used to serve ads or train its other Gemini AI models (beyond what’s being used in Google Photos). Ask Photos will launch in the summer.

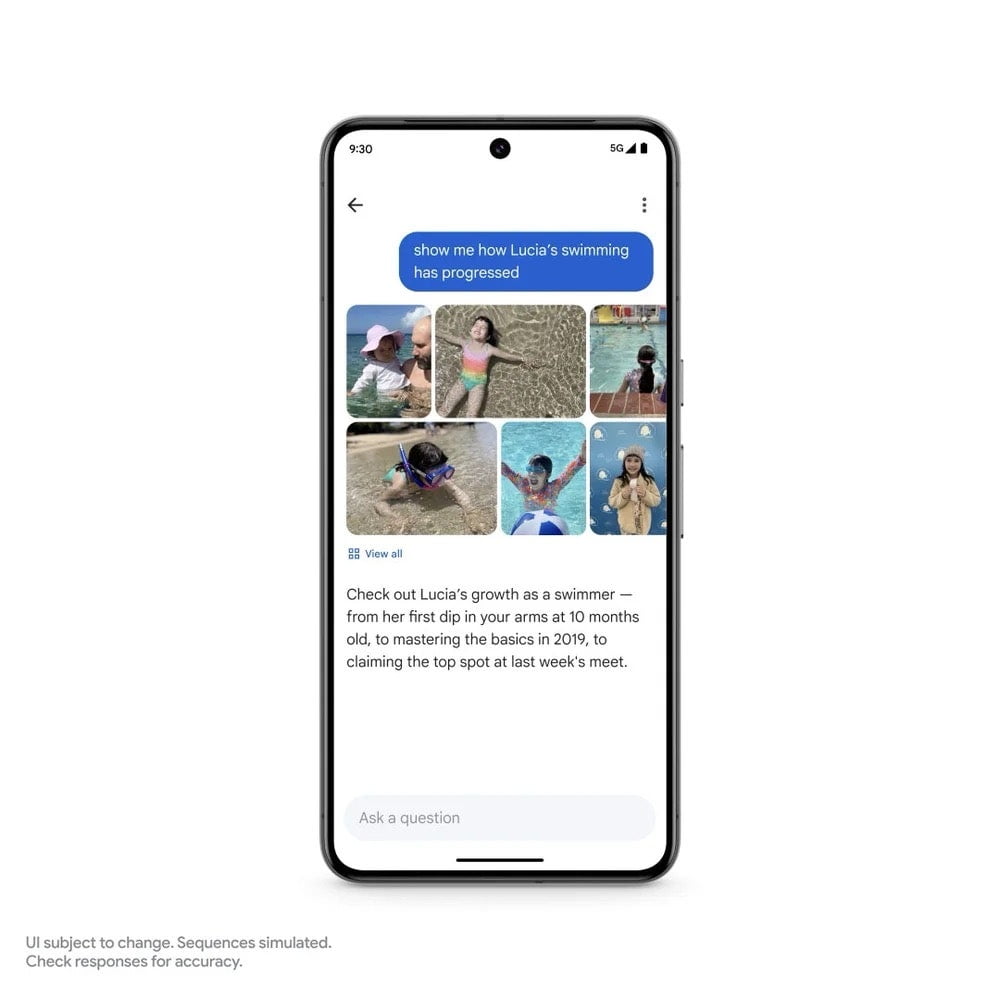

Additionally, Google is incorporating AI into its Workspace office suite. Starting immediately, many Google products, such as Gmail, Google Drive, Docs, Sheets, and Slides, will have a button in the side panel to toggle Google’s Gemini AI. The Gemini assistant can help answer questions, write emails or documents, and summarize lengthy emails or documents.

Lest you think this stuff is all about office work, Google showed off a few features that will appeal to parents, like AI chatbots that can help students with their homework or provide a summary of the PTA meetings you may have missed. Google’s Circle to Search, which made its debut earlier this year, is also getting an update and will soon be able to help kids with schoolwork, such as by breaking down math problems.

An AI Teammate powered by Gemini is also being integrated into apps like Docs and Gmail. Think of it as your very own productivity companion at work. (It was called Chip for the purposes of today’s demo.) With the AI Teammate, you can create to-do lists, keep track of project files, better organize team discussions, and monitor assignment completion. It resembles a Slackbot with more power.

Gems, a new feature that lets you set up automated routines for tasks you want Gemini to perform on a regular basis, was also demonstrated to us. It can be configured to perform a variety of digital tasks when given a voice command or a text prompt. Google calls each of those routines “Gems” as a play on the Gemini name.

To get a deeper look at all the new things coming to Gemini on Android, read Julian Chokkattu’s story. More information about the Workspace integrations and AI Teammate will be available soon.

Google’s Gemini AI has new models that are tailored to different kinds of tasks. The faster, lower-latency Gemini 1.5 Flash is designed for applications where speed is important.

Project Astra is a visual chatbot, and sort of a souped-up version of Google Lens. It lets users open their phone cameras and ask questions about just about anything around them by pointing the camera at things. Google showed off a video demo where somebody asked Astra a series of questions based on their surroundings. Astra has better spatial and contextual understanding, which Google says lets it tell users things like what town they are in, explain the inner workings of some code on a computer screen, or even come up with a clever band name for their dog. The demo showed Astra’s voice-powered interactions working through a phone’s camera as well as a camera embedded in some (unidentified) smart glasses.

Will Knight delves deeper into Project Astra in his news piece from earlier today.

Google acknowledged the artistic aspect of its AI endeavors by showcasing a range of tool demos created by Google Labs, the company’s experimental AI branch.

The newest of these is VideoFX, a generative video model based on Google DeepMind’s Veo video generator. It produces 1080p videos from text prompts and offers more flexibility in the production process than before. Google has also improved ImageFX, a high-resolution image generator that Google says has fewer issues with creating unwanted digital artifacts in pictures than its previous image generator. It is also better at analyzing a user’s prompts and generating text.

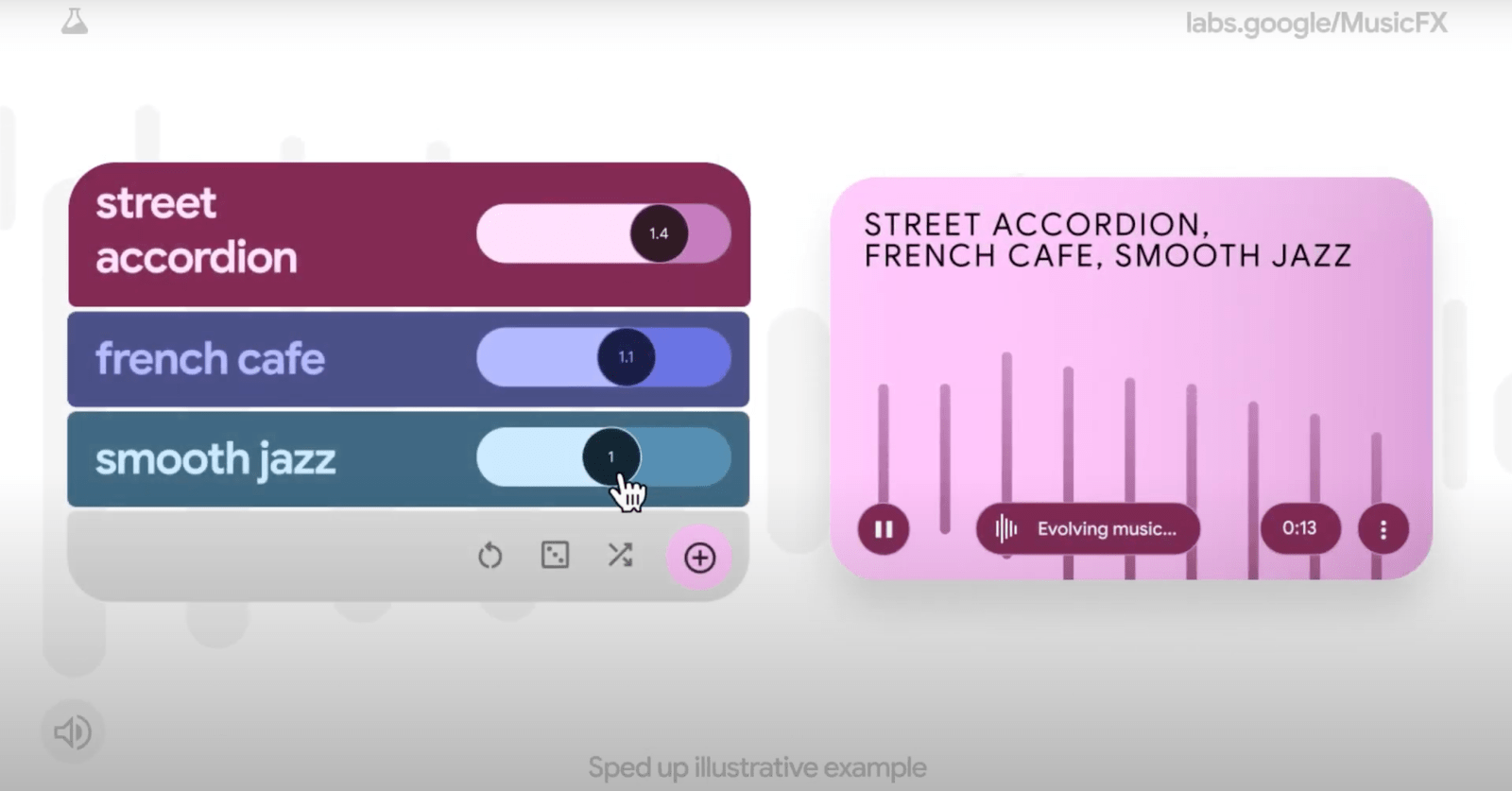

Additionally, Google unveiled a new DJ Mode in MusicFX, an AI music generator that lets artists create song loops and samples from prompts. (DJ Mode featured in Marc Rebillet’s quirky and entertaining performance, which preceded the I/O keynote.)

Google started out as a small search-focused startup, and it remains the dominant force in search despite some excellent, slightly more private alternatives. Google’s most recent AI updates drastically change its main product.

The new features include better results from longer queries and from searches with photos, as well as AI-organized search, which presents results in a more concise and readable layout.

We also saw AI Overviews, which are condensed summaries that combine information from several sources to answer the query you typed into the search box. These summaries show up at the top of the results, so you can find the answers you’re looking for without ever visiting a website. They are already contentious: publishers and websites worry that a Google search that provides answers without requiring users to click on links might be disastrous for sites that already have to go to great lengths to appear in Google’s search results. Nevertheless, these substantially improved AI Overviews are rolling out to everyone in the US as of today.

A new feature called Multi-Step Reasoning lets you find multiple layers of information about a topic when you’re searching for something with some contextual complexity. Using the example of planning a trip, Google demonstrated how searching in Maps can help identify hotels and arrange transit itineraries. After that, it offered restaurant recommendations and helped organize the trip’s meals. You can narrow down your search by focusing on vegetarian or specialty food options. All of this information is presented to you in an organized way.

Finally, we saw a brief demonstration of how users can rely on Google Lens to provide information about whatever they point their camera at. (Yes, this sounds a lot like Project Astra, but Lens is integrating these features in a slightly different way.) In the demo, a woman was trying to fix a supposedly broken turntable. Google determined that the record player’s tonearm only needed to be adjusted, and it gave her a few options for text- and video-based instructions on how to do so. Through the camera, it was even able to correctly identify the turntable’s brand and model.

The head of Google Search, Liz Reid, spoke with WIRED’s Lauren Goode about all the AI changes coming to Google Search and their implications for the internet at large.

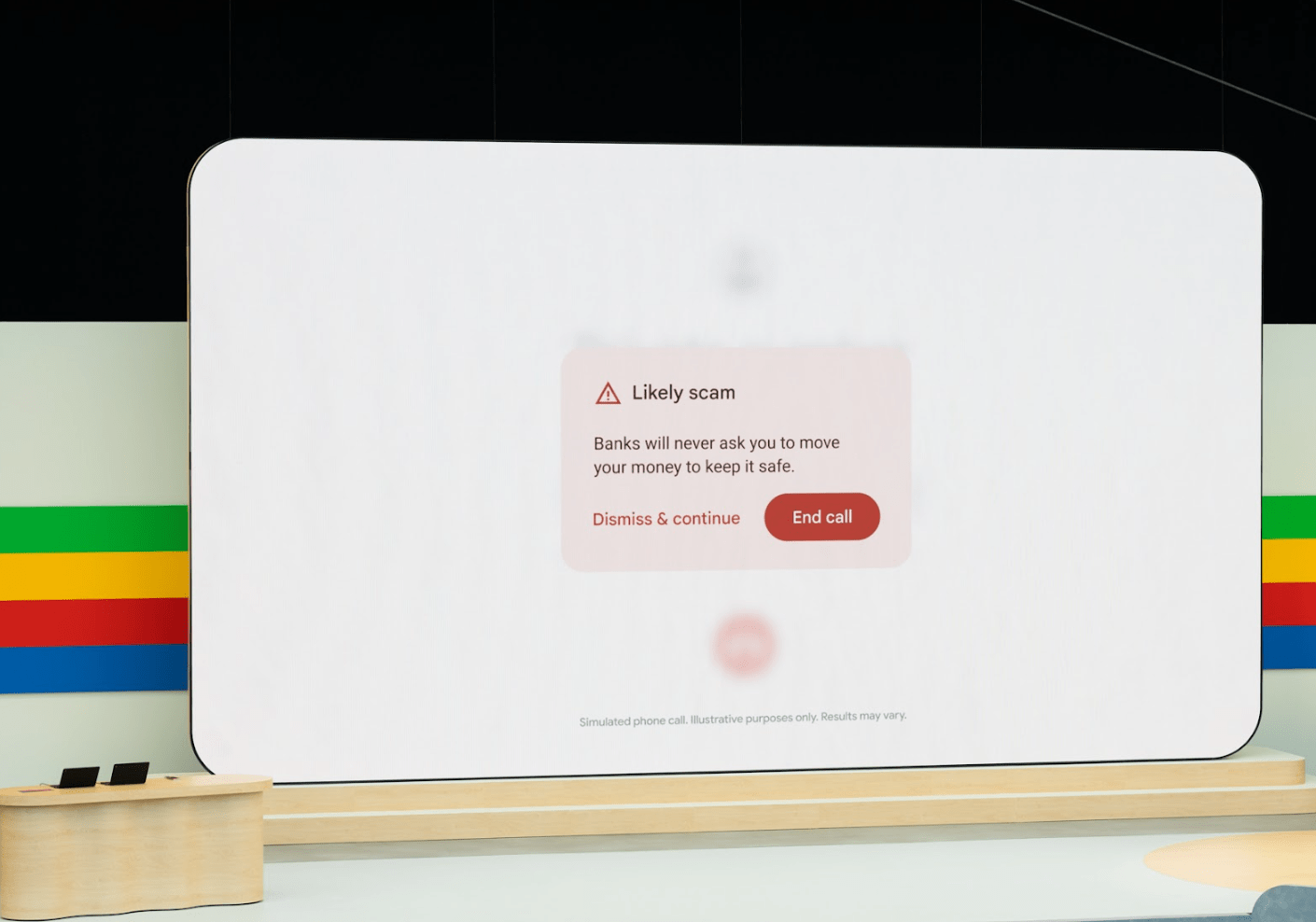

One of the last notable things we saw during the keynote was a new scam detection feature for Android, which can listen in on your phone calls and flag any language that sounds like something a scammer would use, such as asking you to move money into a different account. If it detects that you are being tricked, it will interrupt the call and display an onscreen prompt advising you to hang up. According to Google, the feature is more private because it runs on the device rather than sending your phone calls to the cloud for analysis. (Also see WIRED’s article on defending yourself and your family against AI scam calls.)

Google has further developed its SynthID watermarking tool, which is designed to identify artificial intelligence-generated media. This can assist you in identifying false information, phishing spam, and deepfakes. The tool leaves an imperceptible watermark that can’t be seen with the naked eye, but can be detected by software that analyzes the pixel-level data in an image. The new updates have expanded the feature to scan content on the Gemini app, on the web, and in Veo-generated videos. Google says it plans to release SynthID as an open source tool later this summer.
