OpenAI, the San Francisco-based artificial intelligence giant behind ChatGPT, is once again in the headlines, not for a new model release but for an escalating legal and ethical controversy. The company is being accused of using intimidation tactics and legal pressure to silence critics and shape the conversation surrounding California’s Transparency in Frontier Artificial Intelligence Act (SB 53).

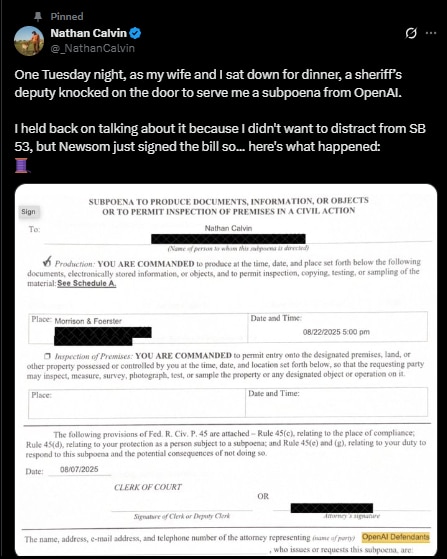

The dispute erupted after Nathan Calvin, a 29-year-old lawyer and general counsel for the nonprofit Encode, published a viral thread on X (formerly Twitter) on Friday. Calvin alleged that OpenAI attempted to undermine the AI transparency bill through aggressive legal maneuvers, even invoking its ongoing lawsuit against Elon Musk to discredit critics like Encode by falsely implying they were secretly funded by Musk.

“OpenAI intimidated their critics and implied that Elon is behind all of them using the pretext of their lawsuit against Musk,” Calvin wrote in his post. “Mogul does not fund us.”

A viral thread sparks a storm of reactions

The controversy traces back to August, when Encode — a small nonprofit with just three full-time employees — received an unexpected subpoena. Calvin recounted that as he sat down for dinner with his wife, a sheriff’s officer arrived at his doorstep with legal papers demanding that he and Encode hand over all communications relating to OpenAI’s governance, investors, and AI policy work, including private discussions about the SB 53 bill.

“Having a half-trillion-dollar company target you was terrifying,” said Sunny Gandhi, Encode’s Vice President of Political Affairs, in a statement to The San Francisco Standard.

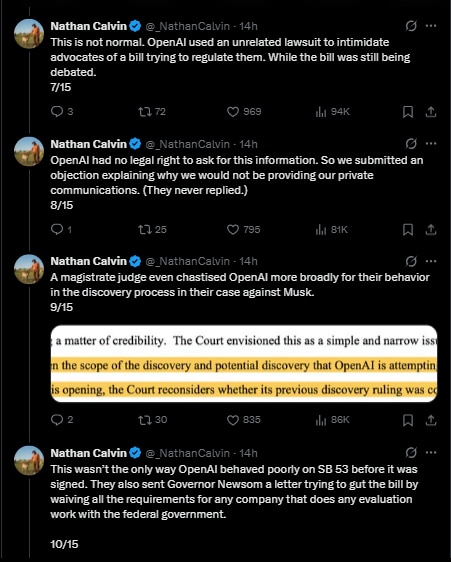

Calvin argued that the subpoena was a clear act of legal intimidation rather than legitimate discovery. Others in the AI accountability space agree. Tyler Johnston, who runs the AI watchdog The Midas Project, said he had a similar experience in Oklahoma, where an officer arrived with a subpoena demanding all records “in the broadest sense permitted” about OpenAI. Johnston posted on X, “If they had just asked if I’m funded by Musk, I would’ve said, ‘Man, I wish,’ and called it a day.”

Community backlash grows against OpenAI

The uproar prompted responses from several members of the AI ethics community and even from current and former OpenAI staff. Joshua Achiam, OpenAI’s Head of Mission Alignment, admitted that “this doesn’t seem great” and acknowledged that speaking out could put his entire career at risk. Meanwhile, Helen Toner, a former board member who previously raised concerns about OpenAI’s direction, wrote that while the company produces valuable work, “the dishonesty and intimidation tactics in their policy operations are really not acceptable.”

OpenAI’s official response

In response to the growing backlash, Jason Kwon, OpenAI’s Chief Strategy Officer, issued a statement defending the company’s actions. He argued that Encode’s possible connection to Elon Musk’s lawsuit against OpenAI “raises legitimate questions,” adding that issuing subpoenas was “standard practice in litigation.” Kwon further accused critics of “spinning a narrative that makes it sound like something it wasn’t,” according to Fortune.

However, Calvin rejected that defense. He told Fortune that during negotiations over SB 53, OpenAI lobbied to dilute key provisions designed to enforce accountability. Governor Gavin Newsom ultimately signed the bill into law in September, requiring companies developing advanced AI systems to file risk assessments, report safety incidents, and disclose core safety practices to the state government.

Inside the Encode vs OpenAI standoff

According to Calvin, OpenAI lobbied Governor Newsom’s administration to classify companies as compliant if they participated in international or federal AI safety frameworks — a move Calvin says would have created a loophole shielding major corporations like OpenAI from state-level scrutiny.

“I didn’t want this to become a story about Encode versus OpenAI,” Calvin emphasized. “I wanted it to be about the merits of the bill.” However, he decided to speak publicly after hearing Chris Lehane, OpenAI’s Global Affairs Chief, describe the company’s lobbying as an effort to “improve” SB 53. “That felt completely inconsistent with my experience,” Calvin remarked.

Founded in 2020 by Sneha Revanur — who was just 15 years old at the time — Encode has refused to comply with the subpoena, maintaining it has no affiliation with Elon Musk or his ventures. So far, OpenAI has not followed up with any additional legal action.

Meanwhile, some within OpenAI are calling for internal reflection. Achiam urged colleagues to “engage more constructively” with policy critics, adding, “We can’t act in ways that make us look like a fearful corporate power instead of a force for public good. We owe a responsibility to humanity.”

“The most stressful period of my professional life”

For Calvin, the ordeal has been emotionally draining. “This has been the most stressful period of my professional life,” he admitted, while clarifying that he is not anti-OpenAI. “I use their products. Their safety research often deserves real credit,” he said. “I just want to see the version of OpenAI that genuinely strives to make the world a better place.”

He concluded with a pointed question now widely shared across social media: “Does anyone truly believe that these actions align with OpenAI’s nonprofit mission to ensure AGI benefits all of humanity?”

The unfolding tension between OpenAI and AI transparency advocates like Encode has reignited the debate about corporate accountability, ethical governance, and the future of AI regulation in the United States.
