OpenAI has returned to the open-source ecosystem with the release of two open-weight artificial intelligence models: gpt-oss-120b and gpt-oss-20b. This is the San Francisco-based AI company’s first major open-weight release since GPT-2 in 2019.

According to the company, both models deliver performance levels comparable to its proprietary o3 and o3-mini systems. Built using a mixture-of-experts (MoE) architecture, the models are designed for efficiency, reasoning transparency, and compatibility with agentic workflows. OpenAI has also emphasized that these models have undergone rigorous safety training and evaluation before public release.

The open weights are now available for download via OpenAI’s official listing on Hugging Face, enabling researchers and developers to run the models locally.
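For readers who want to try this, fetching the weights might look like the minimal sketch below. It assumes the `huggingface_hub` Python package is installed and that the repositories are named `openai/gpt-oss-20b` and `openai/gpt-oss-120b`; the helper names `repo_id` and `download_weights` are hypothetical and exist only for illustration.

```python
# Assumption: the models are published under the "openai" organization on
# Hugging Face with these size suffixes. Check the official listing first.
MODEL_SIZES = ("20b", "120b")


def repo_id(size: str) -> str:
    """Build the assumed Hugging Face repository id for a model size."""
    if size not in MODEL_SIZES:
        raise ValueError(f"size must be one of {MODEL_SIZES}, got {size!r}")
    return f"openai/gpt-oss-{size}"


def download_weights(size: str, local_dir: str) -> str:
    """Download a full snapshot of the repository and return its local path."""
    # Imported lazily so this module loads even without huggingface_hub.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id(size), local_dir=local_dir)
```

Calling `download_weights("20b", "./gpt-oss-20b")` would start a large download, so it is worth checking available disk space beforehand.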

Native Reasoning and Chain-of-Thought Transparency

In a post on X (formerly Twitter), OpenAI CEO Sam Altman highlighted the capability of the larger model, stating that “gpt-oss-120b performs about as well as o3 on challenging health issues.”

One of the defining features of the gpt-oss models is their support for transparent chain-of-thought (CoT) reasoning. Unlike many earlier systems where reasoning steps were hidden, these models expose their reasoning and let users adjust its depth, choosing between low-latency outputs and higher-quality, more detailed responses.
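As a rough illustration of adjustable reasoning depth, the sketch below builds a chat request in which the effort level is set by a line in the system message. The `Reasoning: <level>` convention and the three level names are assumptions here, not details confirmed in this article, and `build_messages` is a hypothetical helper; the model card is the place to verify the exact format.

```python
# Assumed effort levels; not confirmed by the article.
REASONING_LEVELS = ("low", "medium", "high")


def build_messages(question: str, reasoning: str = "medium") -> list[dict]:
    """Return a chat message list that requests a given reasoning depth."""
    if reasoning not in REASONING_LEVELS:
        raise ValueError(f"reasoning must be one of {REASONING_LEVELS}")
    return [
        # Assumption: the depth is requested through the system message.
        {"role": "system", "content": f"Reasoning: {reasoning}"},
        {"role": "user", "content": question},
    ]


# Lower depth favors latency; higher depth favors answer quality.
fast = build_messages("What is 17 * 23?", reasoning="low")
thorough = build_messages("What is 17 * 23?", reasoning="high")
```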

OpenAI states that both models are compatible with its Responses API and can be integrated into agentic workflows. They natively support tool use, including:

- Web search

- Python code execution

- Structured tool calling

This makes them suitable for real-world automation, coding environments, research assistance, and AI agent development.
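To make structured tool calling concrete, here is a minimal sketch of the pattern in general: the application describes a tool with a JSON-Schema-style definition, the model replies with a tool name and JSON arguments, and the application runs the matching function. The `add` tool, the `REGISTRY` table, and the exact field names are hypothetical and do not reproduce any specific OpenAI payload format.

```python
import json

# Hypothetical tool description in a JSON-Schema style.
ADD_TOOL = {
    "type": "function",
    "name": "add",
    "description": "Add two numbers.",
    "parameters": {
        "type": "object",
        "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
        "required": ["a", "b"],
    },
}


def add(a: float, b: float) -> float:
    return a + b


# Maps tool names the model may request to local Python functions.
REGISTRY = {"add": add}


def dispatch(tool_call: dict) -> str:
    """Run the tool named in a call and return its result as JSON text."""
    func = REGISTRY.get(tool_call["name"])
    if func is None:
        return json.dumps({"error": f"unknown tool {tool_call['name']}"})
    args = json.loads(tool_call["arguments"])  # arguments arrive as a JSON string
    return json.dumps({"result": func(**args)})


# A call of the kind a model might emit after seeing ADD_TOOL.
call = {"name": "add", "arguments": '{"a": 2, "b": 3}'}
print(dispatch(call))  # {"result": 5}
```

The returned JSON string would normally be sent back to the model as the tool result so that it can continue its answer.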

Architecture and Technical Specifications

The two models are built on a mixture-of-experts (MoE) framework, which activates only a subset of parameters per token to improve computational efficiency.
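The idea of activating only a subset of parameters per token can be sketched with a toy top-k router. This is a generic illustration of mixture-of-experts routing, not OpenAI's implementation: the number of experts, the value of `k`, and the stand-in "experts" are all assumptions, and a real router would compute its scores from the token's hidden state with a learned layer instead of receiving them directly.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    order = sorted(range(len(router_logits)),
                   key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    # Weights are renormalized over the selected experts only.
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_layer(x, experts, router_logits, k=2):
    """Weighted sum of the outputs of only the selected experts."""
    return sum(w * experts[i](x) for i, w in route_token(router_logits, k))


# Toy setup: four "experts" that simply scale their input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
logits = [0.1, 2.0, -1.0, 2.0]  # illustrative router scores for one token

selected = route_token(logits, k=2)
output = moe_layer(1.0, experts, logits, k=2)
```

Here only two of the four experts run for this token; the other two contribute no computation, which is where the efficiency gain comes from.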

- gpt-oss-120b: 117 billion total parameters, activates 5.1 billion parameters per token

- gpt-oss-20b: 21 billion total parameters, activates 3.6 billion parameters per token

- Context length: 128,000 tokens for both models

The MoE design allows these large-scale models to balance high performance with manageable inference costs, making them more accessible for local and enterprise deployment.
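The parameter counts above give a quick sense of how sparse each model is. The short calculation below uses only the figures quoted in this article; the helper name `active_fraction` is illustrative.

```python
# (total parameters, active parameters per token), as quoted in the article.
MODELS = {
    "gpt-oss-120b": (117e9, 5.1e9),
    "gpt-oss-20b": (21e9, 3.6e9),
}


def active_fraction(total: float, active: float) -> float:
    """Share of the model's parameters used for a single token."""
    return active / total


for name, (total, active) in MODELS.items():
    share = active_fraction(total, active)
    print(f"{name}: {share:.1%} of parameters active per token")
# gpt-oss-120b: 4.4% of parameters active per token
# gpt-oss-20b: 17.1% of parameters active per token
```

The active share governs per-token compute, while all of the weights still have to be stored, so memory requirements follow the total parameter count.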

Training Data and Reinforcement Learning Fine-Tuning

OpenAI confirmed that the majority of the training datasets used for these models consist of English-language text. The company focused heavily on domains such as:

- Science, Technology, Engineering, and Mathematics (STEM)

- Coding and software development

- General knowledge and problem solving

During post-training, OpenAI applied reinforcement learning (RL)-based fine-tuning to enhance reasoning quality, reliability, and alignment with intended usage guidelines.

Benchmark Performance Compared to o3 and o3-Mini

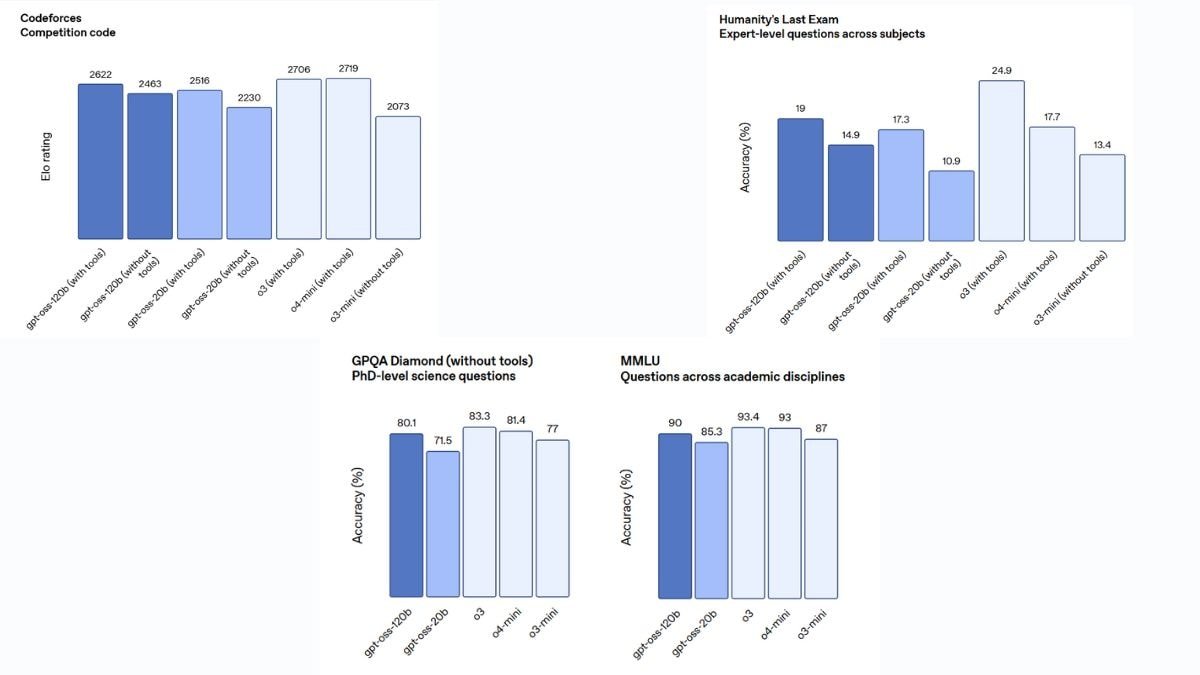

According to OpenAI’s internal benchmark testing, gpt-oss-120b performs competitively:

- Outperforms o3-mini in competition coding (Codeforces)

- Exceeds o3-mini in general problem solving benchmarks such as MMLU and Humanity’s Last Exam

- Shows strong performance in tool-calling evaluations like TauBench

However, OpenAI acknowledges that the open-weight models slightly trail o3 and o3-mini on certain advanced reasoning benchmarks, including GPQA Diamond.

Safety Measures and Risk Mitigation

OpenAI states that extensive safety protocols were implemented throughout development. During pre-training, the company filtered out harmful information related to chemical, biological, radiological, and nuclear (CBRN) hazards.

Additionally, the models were trained to resist prompt injection attacks and to reject harmful or malicious instructions. According to OpenAI, even though the models are released with open weights, they have been engineered to minimize the risk of being easily modified for harmful outputs.

The company asserts that safety alignment remains intact even though the weights are openly available.

A Strategic Return to Open Source

The release of gpt-oss-120b and gpt-oss-20b signals OpenAI’s renewed engagement with the open research community. By combining transparent reasoning, tool integration, and efficient MoE scaling, the company appears to be positioning these models as robust alternatives for researchers, startups, and enterprises seeking high-performance AI without relying solely on proprietary APIs.

As the AI landscape grows increasingly competitive, OpenAI’s open-weight strategy may reshape how developers balance transparency, safety, and cutting-edge performance in next-generation AI systems.
