OpenAI released two open-source artificial intelligence (AI) models on Tuesday. This is the San Francisco-based AI company’s first open release since GPT-2 was made publicly available in 2019. The two new models, called gpt-oss-120b and gpt-oss-20b, are claimed to perform comparably to the o3 and o3-mini variants. The company says the models, which are based on the mixture-of-experts (MoE) architecture, have undergone extensive safety training and testing. Their open weights can be downloaded from Hugging Face.

OpenAI’s Open-Source AI Models Support Native Reasoning

OpenAI CEO Sam Altman announced the models’ availability on X (formerly Twitter), emphasizing that “gpt-oss-120b performs about as well as o3 on challenging health issues.” Notably, the open weights for both models are currently hosted on OpenAI’s Hugging Face listing, and interested users can download them and run the models locally.

According to OpenAI’s website, these models are compatible with the company’s Responses application programming interface (API) and can be used in agentic workflows. They also support tool use, such as web search and Python code execution. In addition, the models offer native reasoning with a transparent chain-of-thought (CoT), and the reasoning effort can be tuned to prioritize either low-latency outputs or higher-quality responses.
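
As an illustration of how such a latency-versus-quality setting might be exposed to a developer, here is a minimal sketch that builds a chat-style message list with a reasoning level. The level names (`low`, `medium`, `high`), the `Reasoning: <level>` system-prompt convention, and the helper `build_messages` are assumptions made for this example and are not taken from the article; the model card should be checked for the actual mechanism.

```python
from typing import Dict, List

# Assumption: these level names and the "Reasoning: <level>" system-prompt
# convention are illustrative only; they are not confirmed by the article.
EFFORT_LEVELS = ("low", "medium", "high")


def build_messages(prompt: str, effort: str = "medium") -> List[Dict[str, str]]:
    """Return a chat message list that requests a given reasoning effort.

    Lower effort is meant to favor latency; higher effort is meant to
    favor answer quality.
    """
    if effort not in EFFORT_LEVELS:
        raise ValueError(f"effort must be one of {EFFORT_LEVELS}, got {effort!r}")
    return [
        {"role": "system", "content": f"Reasoning: {effort}"},
        {"role": "user", "content": prompt},
    ]


if __name__ == "__main__":
    # Quick lookup where speed matters more than depth.
    for message in build_messages("What is a mixture-of-experts model?", "low"):
        print(message)
```

The resulting list could then be passed to whichever chat-completion client or local runtime is serving the model.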

These models are based on the MoE architecture, which limits the number of active parameters per token to improve processing efficiency. The gpt-oss-120b has 117 billion total parameters and activates 5.1 billion per token, while the gpt-oss-20b has 21 billion total parameters and activates 3.6 billion per token. Both models support a context length of 128,000 tokens.
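
To put the parameter figures in perspective, the short sketch below computes what share of each model's parameters is active for a single token. It uses only the counts reported above; the dictionary layout and the function name `active_fraction` are chosen for this example.

```python
# Parameter counts in billions, as reported in the article.
MODELS = {
    "gpt-oss-120b": {"total": 117.0, "active": 5.1},
    "gpt-oss-20b": {"total": 21.0, "active": 3.6},
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of a mixture-of-experts model's parameters used per token."""
    if total_b <= 0:
        raise ValueError("total parameter count must be positive")
    return active_b / total_b


if __name__ == "__main__":
    for name, params in MODELS.items():
        share = active_fraction(params["total"], params["active"])
        print(f"{name}: {share:.1%} of parameters active per token")
    # gpt-oss-120b: 4.4% of parameters active per token
    # gpt-oss-20b: 17.1% of parameters active per token
```

In other words, the larger model touches a much smaller share of its weights per token, which is how an MoE design keeps per-token compute well below what the total parameter count would suggest.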

These open-source AI models were trained mostly on English-language text datasets. The company focused on general knowledge, coding, and STEM (science, technology, engineering, and mathematics) subjects. In the post-training phase, OpenAI used fine-tuning based on reinforcement learning (RL).

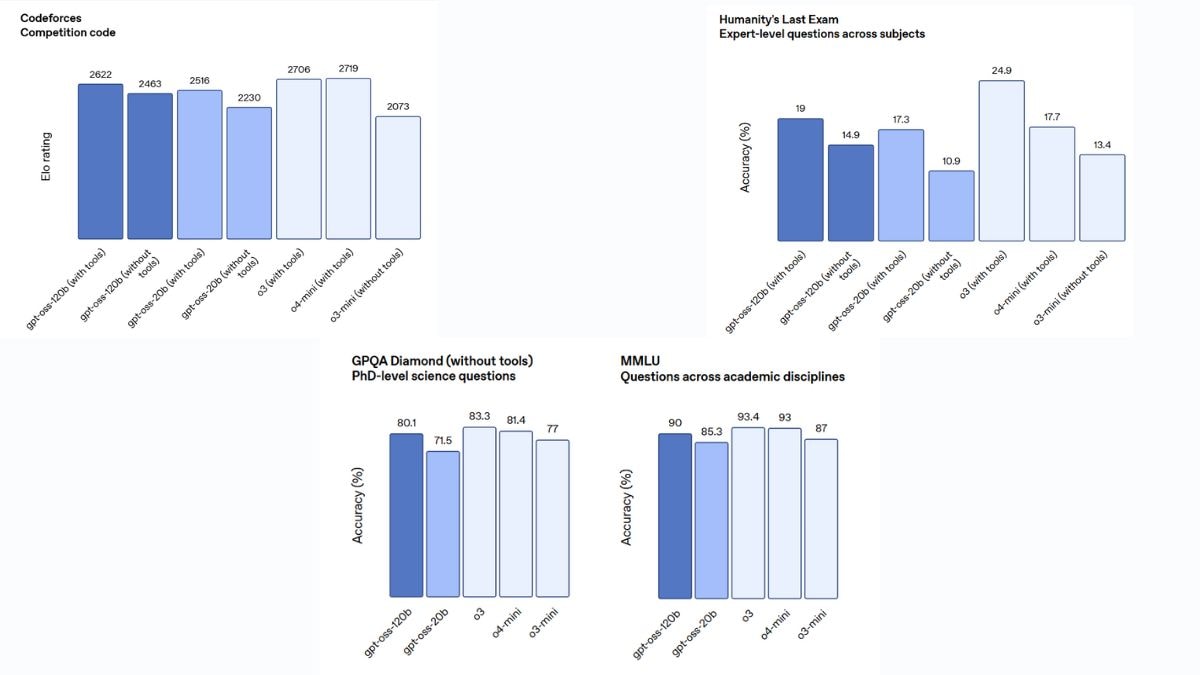

Performance comparison of OpenAI’s open-source models. Image source: OpenAI

According to the company’s internal testing, gpt-oss-120b outperforms o3-mini in competitive coding (Codeforces), general problem solving (MMLU and Humanity’s Last Exam), and tool calling (TauBench). However, on other benchmarks such as GPQA Diamond, the models generally score slightly lower than o3 and o3-mini.

According to OpenAI, these models have undergone extensive safety training. During the pre-training phase, the company filtered out harmful data related to chemical, biological, radiological, and nuclear (CBRN) threats. The AI company added that it used specific techniques to ensure the models refuse unsafe prompts and are protected against prompt injections.

OpenAI asserts that even though the models are open-source, they have been trained so that a malicious actor cannot modify them to produce harmful outputs.
